Applications grow, at least the successful ones. At some point your growing application will require a loadbalancer, whether for scaling, for availability or for some other reason. The day your application runs behind a loadbalancer for the first time will make you proud. And it will make you ask: how can I control what the loadbalancer thinks of my application’s availability? This is how.

The question we are talking about is: “how does the loadbalancer detect whether my application is ok?” But why does the loadbalancer care? Well, for several reasons.

A loadbalanced application consists of several nodes. In most cases every node is some kind of web or application server, like Apache httpd, nginx, Tomcat or JBoss. Nodes can fail. They can fail due to bugs, underlying system failures, overload or a drunken admin executing killall java in the wrong shell. Nodes can also be unavailable on purpose, for example while they are being updated or because they are simply not needed during low-traffic periods. In any case the loadbalancer should know which nodes are ok and send users to healthy nodes only. Ideally a loadbalancer would also know how every node performs and try to balance the load equally, but in reality this never happens. The most frequently used load balancing strategy is round-robin request distribution with some kind of session stickiness (session stickiness means that the same user lands on the same node with every request, which is very useful for caching etc.). So the loadbalancer needs to know whether a node is ok or not, but how?
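The strategy described above can be sketched in a few lines of plain Java. This is round-robin distribution with session stickiness, restricted to healthy nodes. It is a hypothetical illustration and not the code of any real loadbalancer. The class and method names (StickyRoundRobin, route, markDown, markUp) are made up for this example, and the class is not thread-safe.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: round-robin with session stickiness over healthy nodes only. */
class StickyRoundRobin {
    private final List<String> nodes;
    private final Set<String> healthy = new HashSet<>();
    private final Map<String, String> stickyNode = new HashMap<>(); // user -> node
    private int next = 0;                                           // round-robin cursor

    StickyRoundRobin(List<String> nodes) {
        this.nodes = new ArrayList<>(nodes);
        this.healthy.addAll(nodes);
    }

    void markDown(String node) { healthy.remove(node); }

    void markUp(String node) { healthy.add(node); }

    /** Returns the node for this user, or null if no node is healthy. */
    String route(String user) {
        String current = stickyNode.get(user);
        if (current != null && healthy.contains(current)) {
            return current; // stickiness: same user, same node
        }
        // Round-robin: advance the cursor until a healthy node is found.
        for (int i = 0; i < nodes.size(); i++) {
            String candidate = nodes.get(next);
            next = (next + 1) % nodes.size();
            if (healthy.contains(candidate)) {
                stickyNode.put(user, candidate);
                return candidate;
            }
        }
        stickyNode.remove(user);
        return null; // nobody is healthy, e.g. show a maintenance page
    }

    public static void main(String[] args) {
        StickyRoundRobin lb = new StickyRoundRobin(List.of("node1", "node2", "node3"));
        System.out.println(lb.route("alice")); // prints node1
        System.out.println(lb.route("bob"));   // prints node2
        System.out.println(lb.route("alice")); // prints node1 (sticky)
        lb.markDown("node1");
        System.out.println(lb.route("alice")); // prints node3
    }
}
```

Everything here depends on the healthy set being accurate. That is exactly the information the rest of this article is about.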

If you haven’t asked yourself this question before you started using the loadbalancer, you will probably end up with some kind of built-in failure detection by the loadbalancer. Something like “as long as I get a 200 everything is ok, but if I get a 500 this node is dead”. This sounds logical at first sight… but only at first sight. The problem is: applications tend to return an HTTP 500 error, or no answer at all, on a regular basis without really being unavailable for all users. Consider the following hypothetical situation. A user has, willingly or unwillingly, forged a request that leads to a 500 page (which is really not a big deal in most applications). Like most users he’s impatient and clicks refresh often. Every request he sends to your servers leads to an error 500. The loadbalancer sees the errors, assumes that the node is faulty and takes it offline. Now the next request from this user lands on the next node, and the game goes on. This particularly nasty user could take a 10-node application offline within minutes just by pressing F5. (Don’t laugh, I have actually seen this.)
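The F5 scenario is easy to reproduce in a toy model. This hypothetical simulation is not any real loadbalancer’s code. It applies the naive rule “one 500 means the node is dead” to ten nodes and one user whose request always fails. After each failure, the user lands on the next node still in the pool. The names NaiveHealthRule, handle and nodesLeftAfter, and the path "/broken", are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical simulation of a loadbalancer that treats a single HTTP 500 as "node dead". */
class NaiveHealthRule {

    /** Stand-in for the application: this one broken URL fails with a 500 on every node. */
    static int handle(int node, String path) {
        return "/broken".equals(path) ? 500 : 200;
    }

    /** One user requests the path repeatedly; returns how many nodes are left in the pool. */
    static int nodesLeftAfter(int nodeCount, int refreshes, String path) {
        List<Integer> pool = new ArrayList<>();
        for (int i = 0; i < nodeCount; i++) {
            pool.add(i);
        }
        for (int r = 0; r < refreshes && !pool.isEmpty(); r++) {
            int node = pool.get(0); // the user lands on the first node still in the pool
            if (handle(node, path) == 500) {
                pool.remove(0); // naive rule: one 500 and the whole node goes offline
            }
        }
        return pool.size();
    }

    public static void main(String[] args) {
        System.out.println(nodesLeftAfter(10, 10, "/broken")); // prints 0
        System.out.println(nodesLeftAfter(10, 10, "/home"));   // prints 10
    }
}
```

Ten refreshes of one bad URL empty the whole pool, even though every node would still serve every other request just fine.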

To prevent this kind of scenario you want to take control into your own hands. There are multiple ways to do it. Let’s start with the simplest one.

But before we dig in, a note: all code examples are available on GitHub at https://github.com/anotheria/loadbalancercontrol as buildable and executable Java applications. The listings below omit some details for readability.

Simple Filter

The easiest way to control a loadbalancer is to have it check a specific URL every couple of seconds to detect whether the application is available. Any URL will do, but I prefer having a Servlet Filter do the job. This way I can be sure that the Java VM hasn’t given up yet and there is some life in my app.

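```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.annotation.WebFilter;

// Servlet 4.0+ provides default init() and destroy(), so only doFilter() is needed here.
@WebFilter(urlPatterns = "/loadbalancerSimpleFilter")
public class LoadbalancerControlFilter implements Filter {

    @Override
    public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse, FilterChain filterChain) throws IOException, ServletException {
        // Answer the health check directly; the request is deliberately not passed down the chain.
        servletResponse.setContentType("text/plain");
        OutputStream outputStream = servletResponse.getOutputStream();
        outputStream.write("UP".getBytes(StandardCharsets.UTF_8));
        outputStream.close();
    }
}
```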

Now all you need to do is point your loadbalancer to ip:port/loadbalancerSimpleFilter and have it check every node’s availability every couple of seconds. Ideally it should also check that the response really is ‘UP’.
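How you configure such a check (URL, interval, expected response) depends on your loadbalancer product. To show what the check should verify, here is a hypothetical probe written with the JDK’s java.net.http client (Java 11+). A node counts as healthy only if it answers within a timeout, with HTTP 200 and with a body of exactly UP. The class name HealthProbe, the 2-second timeouts and the node address are assumptions for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Hypothetical health probe, as a loadbalancer might run it against every node. */
class HealthProbe {
    private final HttpClient client = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(2))
            .build();

    /** Healthy means: HTTP 200 and a body of exactly "UP". Anything else takes the node out. */
    static boolean isHealthy(int statusCode, String body) {
        return statusCode == 200 && "UP".equals(body);
    }

    /** Probes one node URL; errors and timeouts count as DOWN. */
    boolean check(String url) {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .timeout(Duration.ofSeconds(2)) // a node that does not answer in time is not ok
                .GET()
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            return isHealthy(response.statusCode(), response.body());
        } catch (IOException e) {
            return false; // connection refused, timeout (HttpTimeoutException), ...
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical node address; use ip:port of one of your nodes.
        String url = "http://127.0.0.1:8080/loadbalancerSimpleFilter";
        System.out.println(url + " healthy: " + new HealthProbe().check(url));
    }
}
```

Checking the body, and not just the status code, matters for the techniques that follow. There the filter always answers with 200 but switches its body between UP and DOWN.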

Configured behaviour

As easy as the above method is to implement, it reaches its limits just as quickly. Back in the day I worked for what was then a German internet heavyweight. Their portal had serious traffic (at least serious for that time), and we needed the application to pre-warm its caches after each release. During this short time we didn’t want any user traffic on the site, because:

- every request would be very slow without caches

- the additional load on the database would make the cache warmup even slower

What we did was control what the application actually returned to the loadbalancer, and open the doors to the public only when ready. To do so we used a configured filter, in particular one based on the ConfigureMe framework. The filter now looks a little more advanced:

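```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.FilterConfig;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.annotation.WebFilter;
import org.configureme.ConfigurationManager;

@WebFilter(urlPatterns = "/loadbalancerConfiguredFilter")
public class ConfigurableLoadbalancerControlFilter implements Filter {

    private final LoadbalancerConfig config = new LoadbalancerConfig();

    @Override
    public void init(FilterConfig filterConfig) throws ServletException {
        // Fill config from loadbalancer.json; with watch = true ConfigureMe keeps it up to date.
        ConfigurationManager.INSTANCE.configure(config);
    }

    @Override
    public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse, FilterChain filterChain) throws IOException, ServletException {
        String retValue = config.isSiteAvailable() ? "UP" : "DOWN";
        servletResponse.setContentType("text/plain");
        OutputStream outputStream = servletResponse.getOutputStream();
        outputStream.write(retValue.getBytes(StandardCharsets.UTF_8));
        outputStream.close();
    }
}
```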

The LoadbalancerConfig class is a simple ConfigureMe POJO:

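```java
import org.configureme.annotations.Configure;
import org.configureme.annotations.ConfigureMe;

@ConfigureMe(watch = true, name = "loadbalancer")
public class LoadbalancerConfig {

    // volatile: updated in the background by ConfigureMe, read by request threads.
    @Configure
    private volatile boolean siteAvailable = false;

    public boolean isSiteAvailable() {
        return siteAvailable;
    }

    public void setSiteAvailable(boolean siteAvailable) {
        this.siteAvailable = siteAvailable;
    }
}
```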

When the application starts, the filter is loaded. It then asks ConfigureMe to configure the LoadbalancerConfig from the corresponding file, called loadbalancer.json:

```json
{
  "siteAvailable": false
}
```

We released and delivered the app to the nodes with the siteAvailable flag set to false. This meant that the application would start unavailable and the loadbalancer would always receive DOWN as the response. It would then send users to another node, or to a maintenance page if no other node was available. Once the caches were warmed up and everything else was set, we ran a script that rolled out a new version of loadbalancer.json to the nodes we wanted:

```json
{
  "siteAvailable": true
}
```

ConfigureMe watches configuration files for changes every 10 seconds. Once it detects a change, it reconfigures the corresponding Java object on the fly. So at most 10 seconds after we executed the script, the loadbalancer began to ‘see’ UP responses and started sending traffic to our nodes. We also used the same mechanism for shutdown. We cut a node off from the loadbalancer first, then let Tomcat finish the requests it was still processing before switching it off.
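ConfigureMe does the watching and reconfiguring for you. To show the underlying idea without the framework, here is a hypothetical plain-Java stand-in. It is not ConfigureMe’s implementation or API. Request threads read a volatile flag. A reload method re-reads loadbalancer.json whenever the file’s modification time changes, and a ScheduledExecutorService calls it every 10 seconds, matching the interval above. The names WatchedSiteFlag, reloadIfChanged and startWatching are made up. The regex-based parsing only understands the single siteAvailable field and is a deliberate simplification.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.attribute.FileTime;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical stand-in for a watched configuration flag; this is not ConfigureMe's API. */
class WatchedSiteFlag {
    // Only understands the single boolean field used in loadbalancer.json.
    private static final Pattern SITE_AVAILABLE =
            Pattern.compile("\"siteAvailable\"\\s*:\\s*(true|false)");

    private final Path file;
    private volatile boolean siteAvailable = false; // start DOWN until the file says otherwise
    private FileTime lastModified;                  // guarded by "this"

    WatchedSiteFlag(Path file) {
        this.file = file;
    }

    boolean isSiteAvailable() {
        return siteAvailable;
    }

    /** Re-reads the file if its modification time changed; keeps the old value on errors. */
    synchronized void reloadIfChanged() {
        try {
            FileTime modified = Files.getLastModifiedTime(file);
            if (modified.equals(lastModified)) {
                return; // nothing changed since the last check
            }
            String json = Files.readString(file, StandardCharsets.UTF_8);
            Matcher matcher = SITE_AVAILABLE.matcher(json);
            if (matcher.find()) {
                siteAvailable = Boolean.parseBoolean(matcher.group(1));
            }
            lastModified = modified; // remember the change only once it was read successfully
        } catch (IOException e) {
            // Missing or unreadable file: keep the previous value and retry on the next poll.
        }
    }

    /** Checks the file every 10 seconds on a background thread; shut the executor down on undeploy. */
    ScheduledExecutorService startWatching() {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(this::reloadIfChanged, 0, 10, TimeUnit.SECONDS);
        return scheduler;
    }
}
```

Here is how a filter would use it: create one WatchedSiteFlag in init() and call startWatching(). Read isSiteAvailable() in doFilter(), and shut the returned scheduler down in destroy(). The rollout script then only has to replace the file.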

Health-based behaviour

The configured behaviour is a huge improvement compared to the simple filter, but it has one drawback: it is configured and changed by a human. And how does a human know whether the system performs well? Sure, he can run tests every second, but at some point he’ll want to get some sleep or a cup of coffee, and, honestly, testing a system every second is not a fun job description. Thankfully there are tools, called APM (application performance monitoring), that can monitor an application and detect its health. Of course our example will be based on MoSKito, but any APM will do.

Again, let’s start with the new filter implementation:

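```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.FilterConfig;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import javax.servlet.annotation.WebFilter;
// MoSKito core classes (check the package names against your MoSKito version).
import net.anotheria.moskito.core.threshold.ThresholdRepository;
import net.anotheria.moskito.core.threshold.ThresholdStatus;

@WebFilter(urlPatterns = "/loadbalancerHealthbasedFilter")
public class HealthbasedLoadbalancerControlFilter implements Filter {

    /**
     * Instance of the threshold repository for lookup of the underlying status and thresholds.
     */
    private ThresholdRepository repository;

    @Override
    public void init(FilterConfig filterConfig) throws ServletException {
        repository = ThresholdRepository.getInstance();
    }

    @Override
    public void doFilter(ServletRequest servletRequest, ServletResponse servletResponse, FilterChain filterChain) throws IOException, ServletException {
        ThresholdStatus status = repository.getWorstStatus();
        String retValue;
        switch (status) {
            case GREEN:
            case YELLOW:
            case ORANGE:
                retValue = "UP";
                break;
            case RED:
            case PURPLE:
            case OFF: // no status yet, e.g. shortly after startup
            default:
                retValue = "DOWN";
        }
        servletResponse.setContentType("text/plain");
        OutputStream outputStream = servletResponse.getOutputStream();
        outputStream.write(retValue.getBytes(StandardCharsets.UTF_8));
        outputStream.close();
    }
}
```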

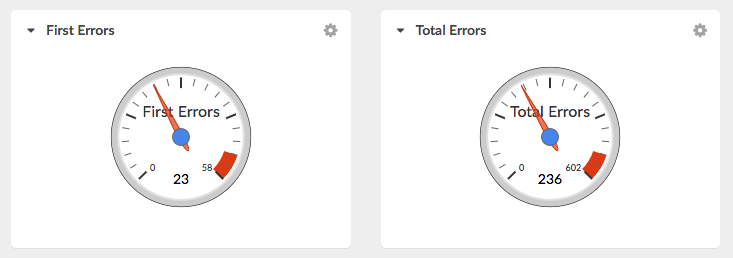

Whenever a new request for the application status comes in, the filter looks up the application’s status, which in MoSKito is done by querying the threshold repository:

```java
ThresholdStatus status = repository.getWorstStatus();
```

This returns the system’s current colour. MoSKito describes the system state with colours from GREEN to PURPLE. In this particular example we decided that if the system is in the RED or PURPLE state, it should report itself DOWN to the loadbalancer. The same applies shortly after system start, when the system has no state yet (state=OFF). The switch in the above code decides whether the loadbalancer gets a DOWN or an UP.
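The decision in that switch can be pulled out into a plain function. That makes it easy to unit-test without a servlet container or MoSKito. In this sketch the enum HealthColour is a hypothetical stand-in that simply mirrors the six ThresholdStatus values used above. With MoSKito on the classpath you would switch on ThresholdStatus directly. The class name LoadbalancerAnswer is also made up.

```java
/** Hypothetical stand-in that mirrors the ThresholdStatus values used in the filter above. */
enum HealthColour { GREEN, YELLOW, ORANGE, RED, PURPLE, OFF }

/** The UP/DOWN decision from the health-based filter, extracted into a testable function. */
class LoadbalancerAnswer {

    static String forStatus(HealthColour status) {
        if (status == null) {
            return "DOWN"; // no status at all: keep the node out of rotation
        }
        switch (status) {
            case GREEN:
            case YELLOW:
            case ORANGE:
                return "UP";   // healthy enough to serve users
            default:
                return "DOWN"; // RED, PURPLE, or OFF (no state yet after startup)
        }
    }

    public static void main(String[] args) {
        for (HealthColour colour : HealthColour.values()) {
            System.out.println(colour + " -> " + forStatus(colour));
        }
    }
}
```

Keeping this mapping in one place also makes it easy to tighten or relax later, for example to take nodes out already at ORANGE.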

If we know our system and configure the thresholds properly, the loadbalancer will be able to take load away from overloaded nodes and move it to less occupied nodes, thus giving the overloaded nodes time to recover.

Of course reality is more complicated than these simple examples. But with the above techniques it is possible to build very fine-grained control of a loadbalancer. And maybe you’ll find another, more sensitive way to influence your loadbalancer’s ‘thinking’. In that case please come back and share!